Thanks to everyone who had a go at my Geek Christmas Quiz. The response was fantastic, with both Iain Holder and Rob Pickering emailing me their answers. I’m pretty sure neither of them Googled any of the questions, since their scores weren’t spectacular :)

So, now the post you’ve all been waiting for with such anticipation… the answers!

Computers

- GNU stands for ‘GNU’s Not Unix!’. A recursive acronym, how geeky is that?

- The A in ARM originally stood for ‘Acorn’, as in Acorn RISC Machine. Yes, I know it stands for ‘Advanced’ now, but the question said ‘originally’.

- TCP stands for Transmission Control Protocol.

- Paul Allen founded Microsoft with Bill Gates. I’ve just finished reading his memoir, ‘Idea Man’. Hard work!

- F2 (hex) = 15 (F) × 16 + 2 = 242 (decimal) = 1111 0010 (binary).

- Windows ME was based on the Ballmer Peak theory of software development.

- The first programmer was Ada Lovelace. Yes yes, I know that’s contentious, but I made up this quiz, so there!

- UNIX time started in 1970 (midnight UTC on 1st January, to be exact). I know this because I just had to write a System.DateTime to UNIX time converter.

- SGI, the mostly defunct computer maker. You get a mark for Silicon Graphics International (or Inc).

- Here’s monadic ‘Bind’ in C#: `M<B> Bind<A,B>(M<A> a, Func<A, M<B>> func)`
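To make that signature concrete, here’s a minimal sketch (a C# 9+ top-level program) that picks `IEnumerable<T>` as the monad `M`. That choice is an assumption for illustration; the question didn’t name a particular monad. For sequences, Bind runs `func` on every element and flattens the resulting sequences into one, which is exactly what LINQ’s `SelectMany` does.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Bind for the sequence ("list") monad, where M<T> is IEnumerable<T>:
// run func on every element of a, then flatten the resulting sequences into one.
static IEnumerable<B> Bind<A, B>(IEnumerable<A> a, Func<A, IEnumerable<B>> func)
{
    foreach (var x in a)
        foreach (var y in func(x))
            yield return y;
}

// Each number produces a small sequence; Bind chains and flattens them.
var result = Bind(new[] { 1, 2, 3 }, x => new[] { x, x * 10 });
Console.WriteLine(string.Join(", ", result)); // 1, 10, 2, 20, 3, 30

// LINQ's SelectMany is the same operation for IEnumerable<T>.
var viaLinq = new[] { 1, 2, 3 }.SelectMany(x => new[] { x, x * 10 });
Console.WriteLine(result.SequenceEqual(viaLinq)); // True
```

Swap `IEnumerable<T>` for another generic type (a Maybe/Option, a Task) and the shape of Bind stays the same; only the “run and flatten” step changes.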

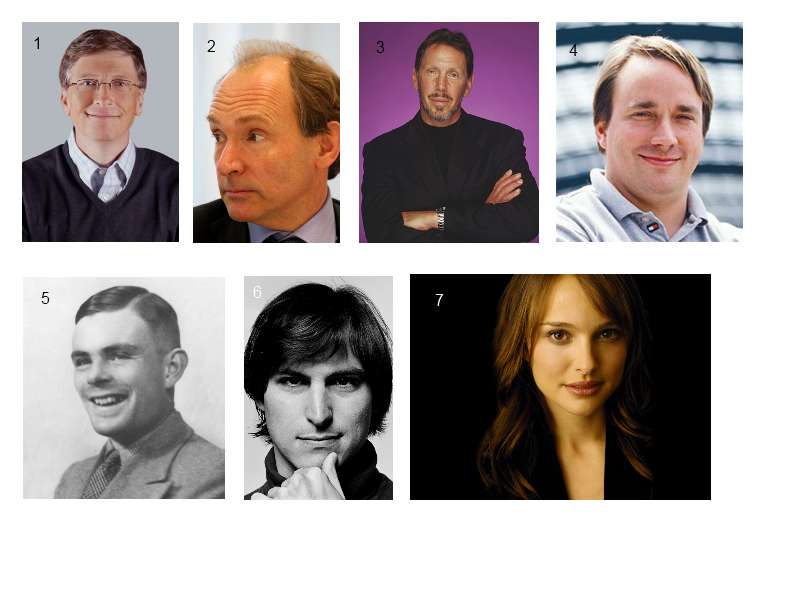
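For the UNIX time answer, here’s a minimal sketch of a DateTime to UNIX time converter (a C# 9+ top-level program), not necessarily the one I wrote. `UnixEpoch`, `ToUnixTime` and `FromUnixTime` are hypothetical helper names, and it assumes whole seconds are precise enough.

```csharp
using System;

// The UNIX epoch: midnight UTC on 1st January 1970.
DateTime UnixEpoch() => new DateTime(1970, 1, 1, 0, 0, 0, DateTimeKind.Utc);

// Hypothetical helper: DateTime -> whole seconds since the epoch.
// ToUniversalTime() converts Local values (and Unspecified ones, which it treats as Local) to UTC.
// Fractions of a second are dropped: integer division truncates toward zero.
long ToUnixTime(DateTime dateTime) =>
    (dateTime.ToUniversalTime() - UnixEpoch()).Ticks / TimeSpan.TicksPerSecond;

// Hypothetical helper: seconds since the epoch -> a UTC DateTime.
DateTime FromUnixTime(long seconds) => UnixEpoch().AddSeconds(seconds);

var y2k = new DateTime(2000, 1, 1, 0, 0, 0, DateTimeKind.Utc);
Console.WriteLine(ToUnixTime(y2k));                // 946684800
Console.WriteLine(FromUnixTime(0).ToString("o")); // 1970-01-01T00:00:00.0000000Z

// .NET Framework 4.6+ and .NET Core have this built in on DateTimeOffset.
Console.WriteLine(new DateTimeOffset(y2k).ToUnixTimeSeconds()); // 946684800
```

If you can target .NET Framework 4.6 or later, the built-in `DateTimeOffset.ToUnixTimeSeconds` and `DateTimeOffset.FromUnixTimeSeconds` save you writing the helpers at all.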

Name That Geek!

![Name that geek](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhIPUJAF4K5VvLtegdvXC0FR2MlrwRhL0bd4OQmIDozF1ozKqx9PAKDt-zquaS8honSKZ_ajuj4UzgkTkzdM4hTm58-EbwM-p2OaGo731ChohFd0AtNrLVOBDegM3IJUu8HH6i1fw/s1600/Name_that_geek%25255B4%25255D.png)

- Bill Gates – Co-founder of Microsoft with Paul Allen.

- Tim Berners-Lee – Creator of the World Wide Web.

- Larry Ellison – Founder of Oracle. Lives in a Samurai House (how geeky is that?)

- Linus Torvalds – Creator of Linux.

- Alan Turing – Mathematician and computer scientist. Described the Turing Machine. Helped save the free world from the Nazis.

- Steve Jobs – Founded Apple, NeXT and Pixar.

- Natalie Portman – Actress and self-confessed geek.

Science

- The four ‘letters’ of DNA are C, G, T and A. If you know the names of the bases they stand for (cytosine, guanine, thymine and adenine), give yourself a bonus point – you really are a DNA geek!

- The ‘c’ in E = mc² is a constant: the speed of light in a vacuum.

- The next number in the Fibonacci sequence 1 1 2 3 5 8 is 13 (5 + 8).

- C8H10N4O2 is the chemical formula for caffeine.

- According to Wikipedia, Australopithecus, the early hominid, became extinct around 2 million years ago.

- You would not find an electron in an atomic nucleus.

- Nitrogen is the most common gas in the Earth’s atmosphere.

- The formula for Ohm’s Law is I = V/R (current = voltage / resistance).

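- A piece of paper that keeps the same ratio between the lengths of its sides when folded in half has sides in the ratio √2 ≈ 1.414, which is why A4 paper is the shape it is. If the long side is a and the short side b, folding in half gives sides b and a/2, so a/b = b/(a/2), which means a/b = √2. It’s easy to confuse this with 1.618, ‘the golden ratio’, but a golden rectangle keeps its ratio when you cut a square off it, not when you fold it in half. Did you know that the ratio between successive Fibonacci numbers tends to the golden ratio? Maths is awesome!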

- The closest living land mammal to the cetaceans (including whales) is the Hippopotamus.
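Both Fibonacci facts above are easy to check. Here’s a minimal sketch (a C# 9+ top-level program; the `Fibonacci` helper is a hypothetical name, and it starts the sequence at 1, 1 as the question did) that prints the first seven terms and shows the ratio of successive terms closing in on the golden ratio φ = (1 + √5)/2.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: the first n Fibonacci numbers, starting 1, 1.
List<long> Fibonacci(int n)
{
    var fib = new List<long>();
    long a = 1, b = 1;
    for (int i = 0; i < n; i++)
    {
        fib.Add(a);
        (a, b) = (b, a + b); // each term is the sum of the previous two
    }
    return fib;
}

var fibs = Fibonacci(20);
Console.WriteLine(string.Join(" ", fibs.GetRange(0, 7))); // 1 1 2 3 5 8 13

// The golden ratio, (1 + sqrt 5) / 2.
double phi = (1 + Math.Sqrt(5)) / 2;

// The ratio of each term to the one before gets closer and closer to phi.
for (int i = 1; i < 10; i++)
{
    double ratio = (double)fibs[i + 1] / fibs[i];
    Console.WriteLine($"{fibs[i + 1]}/{fibs[i]} = {ratio:F6}, error {Math.Abs(ratio - phi):E2}");
}
```

The error shrinks by a factor of roughly φ² with each step, so by the 20th term the ratio agrees with φ to about seven decimal places.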

Space

- The second stage of the Apollo Saturn V moon rocket was powered by five J-2 rocket engines (the third stage used a single J-2).

- Saturn’s largest moon is Titan. It’s also the only moon in the solar system (other than our own) that a spacecraft has landed on.

- You would experience 1/6th of the Earth’s gravity on the moon, or thereabouts.

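- This question proved the most contentious. The answer is false: there is nowhere in space that has no gravity. Astronauts in orbit are weightless because they are in free fall, not because gravity has stopped acting on them. Gravity is a property of space itself (in general relativity, it is the curvature of spacetime).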

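- A geosynchronous spacecraft has an orbital period of 24 hours (strictly, one sidereal day, about 23 hours 56 minutes), matching the Earth’s rotation. If the orbit is also circular and over the equator (a geostationary orbit), the spacecraft appears stationary to an observer on the ground.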

- The furthest planet from the sun is Neptune. Far fewer people know this than know that Pluto used to be the furthest planet from the sun. Actually, Pluto was only the furthest for part of its eccentric orbit.

- There are currently 6 people aboard the International Space Station.

- According to Google (yes, I know) there are 13,000 earth satellites.

- Prospero was the only satellite built and launched by the UK. It was launched by stealth after the programme had been cancelled. That’s the way we do things in the UK.

- The second man on the moon was Buzz Aldrin. He’s never forgiven NASA.
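The 1/6th-gravity and geosynchronous answers can both be checked with a little Newtonian physics. Here’s a minimal sketch (a C# 9+ top-level program; `Math.Cbrt` needs .NET Core 2.1 or later) using Kepler’s third law for a circular orbit, r = ∛(GMT²/4π²), with T set to one sidereal day, plus surface gravity g = GM/r² for the moon. The constants are standard published values, and it assumes circular orbits and spherical bodies.

```csharp
using System;

// Standard gravitational parameters (G times mass), in m^3/s^2.
const double GmEarth = 3.986004418e14;
const double GmMoon = 4.9048695e12;

// One sidereal day: the Earth's rotation period relative to the stars, in seconds.
const double SiderealDay = 86164.0905;

const double EarthEquatorialRadius = 6.378137e6; // metres
const double MoonMeanRadius = 1.7374e6;          // metres
const double StandardGravity = 9.80665;          // m/s^2 at the Earth's surface

// Kepler's third law for a circular orbit: T^2 = 4 pi^2 r^3 / GM, so r = cbrt(GM T^2 / (4 pi^2)).
double geoRadius = Math.Cbrt(GmEarth * SiderealDay * SiderealDay / (4 * Math.PI * Math.PI));
double geoAltitudeKm = (geoRadius - EarthEquatorialRadius) / 1000;
Console.WriteLine($"Geostationary orbit radius: {geoRadius / 1000:F0} km, altitude {geoAltitudeKm:F0} km");

// Surface gravity g = GM / r^2, compared with the Earth's standard gravity.
double moonG = GmMoon / (MoonMeanRadius * MoonMeanRadius);
Console.WriteLine($"Moon surface gravity: {moonG:F2} m/s^2, {moonG / StandardGravity:F3} of Earth's");
```

It should report a geostationary radius of roughly 42,164 km (about 35,786 km above the equator) and a lunar surface gravity of about 1.62 m/s², roughly 0.166 of the Earth’s, which is where the 1/6th comes from.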

Name That Spaceship!

![Name that spaceship](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhapy2vjmnb2jM89_PvW5jSM7n6hDTFBDVGTJuTBlfNvd5ADEg1RQZrQ-dnI5AQu-Idyhq_DhkYfxBGaN6lxPN4B1ipZyOsi2YYZ9hW0ofk7YvEthsJeQSJzSE-xlH1JN9mnL_UCQ/s1600/Name_that_spaceship%25255B4%25255D.png)

In this round, give yourself a point if you can name the film or TV series the fictional spacecraft appeared in.

- Red Dwarf. Sorry, you probably have to be British to get this one.

- Space: 1999. Sorry, you really have to be British and over 40 to get this one … or a major TV space geek.

- Voyager. A difficult one: interplanetary probes all look similar.

- Apollo Lunar Excursion Module (LEM). You can have a point for ‘Lunar Module’, but no, you don’t get a point for ‘Apollo’. Call yourself a geek?

- Skylab. The first US space station, made out of old Apollo parts. Not many people get this one. I read a whole book about it; that’s how much of a space geek I am.

- Darth Vader’s TIE fighter. You can have a point for ‘TIE Fighter’. You can’t have a point for ‘Star Wars’. Yes yes, I know I’m contradicting myself, but, come on, every geek should know this.

- Curiosity. No, no points for ‘Mars Rover’.

- 2001: A Space Odyssey. Even I don’t know what the ship is called.

- Soyuz. It’s been used by the Russians to travel into space since 1966. 46 years! It’s almost as old as me. It’s odd, when space travel is so synonymous with high technology, that so much of the hardware is actually ancient.

Geek Culture

- Fox ‘Spooky’ Mulder was the FBI agent in The X-Files, played by David Duchovny.

- Kiki is the trainee witch in ‘Kiki’s Delivery Service’, one of my favourite anime movies by the outstanding Studio Ghibli.

- The actual quote: “Humans are a disease, a cancer of this planet.” by Agent Smith. You can have a point for Virus or Cancer too. Thanks Chris for the link and clarification.

- Spider-Man, of course!

- “It’s a Banana” Kryten, of Red Dwarf, learns to lie.

- My wife, who is Japanese, translates ‘Otaku’ as ‘geek’. Literally it means ‘you’ and is used to describe someone with obsessive interests. An appropriate question for a geek quiz I think.

- The name R2D2 apparently came about when Lucas heard someone ask for Reel 2, Dialog Track 2 in the abbreviated form ‘R-2-D-2’. It was later said to stand for Second Generation Robotic Droid Series 2. You can have a point for either.

- Clarke’s 3rd law states: “Any sufficiently advanced technology is indistinguishable from magic.”

- African or European? From Monty Python and the Holy Grail.

- Open the pod bay doors please, HAL. 2001: A Space Odyssey. Or on acid here.

So there you are. I hope you enjoyed it, and maybe even learnt a little. I certainly did. I might even do it again next year.

A very Merry Christmas to you all!