This article discusses the relationship in software development between code organisation and social organisation. I discuss why software and teams do not scale easily, lessons we can learn from biology and the internet, and show how we can decouple software and teams to overcome scaling problems.

The discussion is based on my 20 years’ experience of building large software systems, but I’ve also been very impressed with the book Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations by Nicole Forsgren, Jez Humble and Gene Kim, which provides research data to back up most of the assertions that I make here. It’s a highly recommended read.

Software and software teams do not scale.

It’s a very common story: the first release of a product, perhaps written by one or two people, often seems remarkably easy. It might only provide limited functionality, but it is written quickly and fulfils the customer’s requirements. Customer communication is great because the customer is usually in direct contact with the developers. Any defects are quickly fixed and new features can be added quite painlessly. After a while, though, the pace slows. Version 2.0 takes a little longer than expected, it’s harder to fix bugs, and new features don’t seem to come out quite so easily. The natural response is to add new developers to the team, yet each extra person seems to reduce productivity. As the software ages and grows in complexity it appears to atrophy. In extreme cases, organizations can find themselves running on software that’s hugely expensive to maintain and that seems almost impossible to change. There are negative scale effects. The problem is that you don’t have to make ‘mistakes’ for this to happen; it’s so common that one could almost say that it’s a ‘natural’ property of software.

Why is this? There are two reasons, one code-related and one team-related. Neither code nor teams scale well.

As a codebase grows, it becomes harder for a single person to understand. Human cognitive limits are fixed: a single individual can maintain a mental model of the details of a small system, but past a certain size the system exceeds the cognitive range of any one person. Once a team grows beyond about five people, it’s almost impossible for one person to stay up to speed with how all parts of the system work. When no one person understands the complete system, fear reigns. In a large, tightly coupled system it’s very hard to know the impact of any significant change, since the consequences are not localised. Developers learn to work in a minimum-impact style of work-arounds and duplication rather than factoring out commonalities and creating abstractions and generalisations. This feeds back into system complexity, further amplifying these negative trends. Developers stop feeling any ownership of code they don’t really understand and are reluctant to refactor. Technical debt increases. It also makes for unpleasant and unsatisfying work and encourages ‘talent evaporation’, where the best developers, those who can more easily find work elsewhere, move on.

Teams also don’t scale. As the team grows, communication gets harder. The simple network formula comes into play:

c = n(n-1)/2

(where n is the number of people and c is the number of communication channels)

| Number of team members | Number of communication channels |
| --- | --- |
| 1 | 0 |
| 2 | 1 |
| 5 | 10 |
| 10 | 45 |
| 100 | 4950 |

The communication and coordination needs of the team rise quadratically as the team size increases. It’s very hard for a single team to stay a coherent entity over a certain size, and the natural human social tendency to split into smaller groups will lead to informal sub-groups forming even if there is no management input. Peer-level communication becomes difficult and will naturally be replaced by emergent leaders and top-down communication. Team members change from being equal stakeholders in the system to directed workers. Motivation suffers and there is a lack of ownership driven by the diffusion of responsibility effect.
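
The figures in the table follow directly from the formula c = n(n-1)/2. As a minimal sketch (the function name `communication_channels` is my own choice for illustration), the values can be reproduced like this:

```python
def communication_channels(n: int) -> int:
    """Number of pairwise communication channels in a team of n people."""
    if n < 0:
        raise ValueError("team size cannot be negative")
    # Each of the n people can talk to n - 1 others; halve to avoid
    # counting every pair twice.
    return n * (n - 1) // 2


if __name__ == "__main__":
    for size in (1, 2, 5, 10, 100):
        print(f"{size:>3} people -> {communication_channels(size):>4} channels")
```

Note how a tenfold increase in team size, from 10 to 100 people, produces roughly a hundredfold increase in channels, from 45 to 4950.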

Management often intervenes at this stage and formalises the creation of new teams and management structures to organize them. But whether formal or informal, larger organisations find it hard to keep people motivated and actively engaged.

It’s typical to blame poorly skilled developers and bad management for these scaling pathologies, but that’s unfair. Scale issues are a ‘natural’ property of growing and aging software; it’s what always happens unless you spot the problem early, recognise the inflexion point and work very hard to mitigate it. Software teams are constantly being created, the amount of software in the world is constantly growing and most software is small scale, so it’s quite common for a successful and growing product to have been created by a team that has no experience of large-scale software development. Expecting them to recognise the inflexion point when the scale issues start to bite, and to know what to do about it, is unrealistic.

Scaling lessons from nature

I recently read Geoffrey West’s excellent book Scale. He talks about the mathematics of scale in biological and socio-economic systems. His thesis is that all large complex systems obey fundamental scaling laws. It’s a fascinating read and very much recommended. For the purposes of this discussion I want to focus on his point that many biological and social systems scale amazingly well. Take the basic mammal body plan. We share the same cell types, bone structure, and nervous and circulatory systems with all mammals. Yet the difference in size between a mouse and a blue whale is a factor of around 10^7. How does nature use the same fundamental materials and plans for organisms of such hugely different scales? The answer appears to be that evolution has discovered fractal branching networks. This can be seen quite obviously if you consider a tree. Each small part of the tree looks like a small tree. The same is true for our mammalian circulatory and nervous systems: they are branching fractal networks where a small part of your lungs or blood vessels looks like a scaled-down version of the whole.

Can we take these ideas from nature and apply them to software? I think there are important lessons that we can learn. If we can build large systems which have smaller pieces that look like complete systems themselves, then it might be possible to contain the pathologies that affect most software as it grows and ages.

Are there existing software systems that scale many orders of magnitude successfully? The obvious answer is the internet, a global software system of millions of nodes. Subnets do indeed look and work like smaller versions of the whole internet.

Attributes of decoupled software.

The ability to decouple software components from the larger system is the core technique for successful scaling. The internet is fundamentally a decoupled software architecture. This means that each node, service or application on the network has the following properties:

- Obeys a shared communication protocol.
- Only shares state via a clear contract with other nodes.
- Does not require implementation knowledge to communicate.
- Versioned and deployed independently.

The internet scales because it is a network of nodes that communicate over a set of clearly defined protocols. The nodes only share their state via the protocol; the implementation details of one node do not need to be understood by the nodes communicating with it. The global internet is not deployed as a single system; each node is separately versioned and deployed. Individual nodes come and go independently of each other. Obeying the internet protocol is the only thing that really matters for the system as a whole. Who built each node, when it is created or deleted, how it’s versioned, and what particular technologies and platforms it uses are all irrelevant to the internet as a whole. This is what we mean by decoupled software.
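
To make these properties concrete, here is a small Python sketch. Everything in it is hypothetical and invented for illustration: the `Request` and `Response` types stand in for a shared protocol, and the two catalogue classes stand in for nodes built by different teams. The point is only that the client function depends on the contract and nothing else.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Request:
    """Part of the shared contract: what a client may send."""
    path: str


@dataclass(frozen=True)
class Response:
    """Part of the shared contract: what a node must return."""
    status: int
    body: str


class Node(Protocol):
    """Any object with a matching handle() obeys the (hypothetical) protocol."""

    def handle(self, request: Request) -> Response: ...


class InMemoryCatalogue:
    """One node: keeps its state in a private dict, with its own version."""

    version = "1.4.0"

    def __init__(self) -> None:
        self._items = {"/items/1": "teapot"}

    def handle(self, request: Request) -> Response:
        item = self._items.get(request.path)
        if item is None:
            return Response(404, "not found")
        return Response(200, item)


class ComputedCatalogue:
    """Another node: different internals, different version, same protocol."""

    version = "0.9.2"

    def handle(self, request: Request) -> Response:
        if request.path == "/items/1":
            return Response(200, "teapot")
        return Response(404, "not found")


def fetch(node: Node, path: str) -> str:
    """A client that knows only the protocol, never the node's implementation."""
    response = node.handle(Request(path))
    return response.body if response.status == 200 else f"error {response.status}"
```

Either catalogue can be passed to `fetch`, replaced, or re-versioned without the client changing, which is the in-process analogue of nodes on a network coming and going independently.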

Attributes of decoupled teams.

We can scale teams by following similar principles:

- Each sub-team should look like a complete small software organization.
- The internal processes and communication of the team should not be a concern outside the team.
- How the team implements software should not be important outside the team.
- Teams should communicate with the wider organisation about external concerns: common protocols, features, service levels and resourcing.

Small software teams are more efficient than large ones, so we should break large teams into smaller groups. The lesson from nature and the internet is that each sub-team should look like a single, small software organisation. How small? Ideally one to five individuals.
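
A rough calculation shows why this helps. The sketch below reuses the channel formula c = n(n-1)/2 and makes a simplifying assumption of my own that is not a measured result: within a sub-team everyone talks to everyone, while between sub-teams each pair of teams needs only a single channel, because they communicate over an agreed protocol rather than person to person.

```python
def channels(n: int) -> int:
    """Pairwise channels among n communicating parties: n(n-1)/2."""
    return n * (n - 1) // 2


def decoupled_channels(people: int, team_size: int) -> int:
    """Total channels when people are split into sub-teams of at most team_size.

    Assumption (illustrative only): each pair of sub-teams shares exactly
    one channel, however many people are in each team.
    """
    if people < 0 or team_size < 1:
        raise ValueError("people must be >= 0 and team_size must be >= 1")
    full_teams, remainder = divmod(people, team_size)
    sizes = [team_size] * full_teams + ([remainder] if remainder else [])
    internal = sum(channels(size) for size in sizes)  # inside each team
    external = channels(len(sizes))                   # between teams
    return internal + external


if __name__ == "__main__":
    print("one team of 100:", channels(100))
    print("20 teams of 5:  ", decoupled_channels(100, 5))
```

Under that assumption, 100 people in one team have 4950 channels, while 20 teams of five have 200 internal channels plus 190 between teams, 390 in total. Even this figure is a worst case, since it supposes that every team needs to talk to every other team.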

The point that each team should look like a small independent software organisation is important. Other ways of structuring teams are less effective. It’s often tempting to split up a large team by function, so we have a team of architects, a team of developers, a team of DBAs, a team of testers, a deployment team and an operations team, but this solves none of the scaling problems we talked about above. A single feature needs to be touched by every team, often in an iterative fashion if you want to avoid waterfall style project management - which you do. The communication boundaries between these functional teams become a major obstacle to effective and timely delivery. The teams are not decoupled because they need to share significant internal details in order to work together. Also the interests of the different teams are not aligned: The development team is usually rewarded for feature delivery, the test team for quality, the support team for stability. These different interests can lead to conflict and poor delivery. Why should the development team care about logging if they never have to read the logs? Why should the test team care about delivery when they are rewarded for quality?

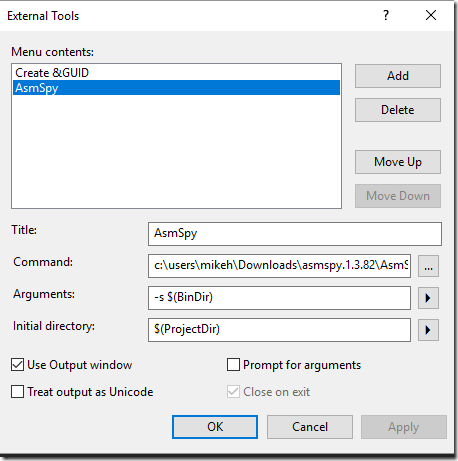

Instead we should organise teams by decoupled software services that support a business function, or a logical group of features. Each sub-team should design, code, test, deploy and support their own software. The individual team members are far more likely to be generalists than specialists because a small team will need to share these roles. They should focus on automating as much of the process as possible: automated tests, deployment and monitoring. Teams should choose their own tools and decide for themselves how to architect their systems. While the organizational protocols that the system uses to communicate must be decided at an organization level, the choice of tools used to implement the services should be delegated to the teams. This very much aligns with a DevOps model of software organization.

The level of autonomy that a team has is a reflection of the level of decoupling from the wider organization. Ideally the organization should care about the features, and ultimately business value, that the team provides, and the cost of resourcing the team.

The role of the software architect is important in this style of organisation. They should not focus on the specific tools and techniques that teams use, or micro-manage the internal architecture of the services. Instead, they should concentrate on the protocols and interactions between the various services and the health of the system as a whole.

Inverse Conway: software organisation should model the target architecture.

How do decoupled software and decoupled teams align? Conway’s Law states that:

"organizations which design systems ... are constrained to produce designs which are copies of the communication structures of these organizations."

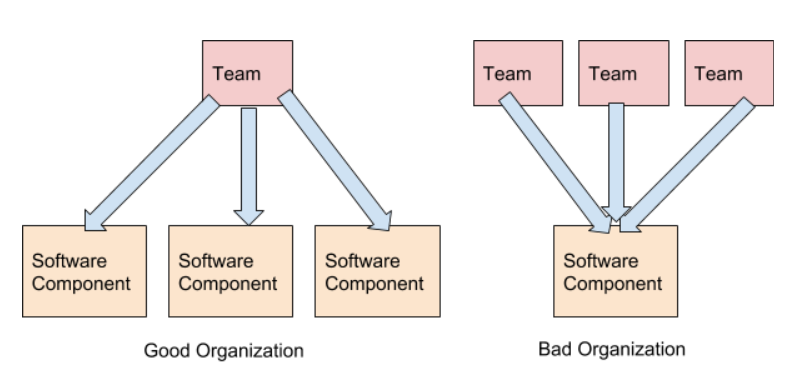

This is based on the observation that the architecture of a software system will reflect the team structure of the organization that creates it. We can ‘hack’ Conway’s Law by inverting it: organize our teams to reflect our desired architecture. With this in mind we should align our decoupled teams with our decoupled software components. Should this be a one-to-one relationship? I think this is ideal, although it seems that it’s fine for a single small team to deliver several decoupled software services. I would argue that the scaling inflexion point for teams is larger than that for software, so this style of organisation seems valid. However, it’s important that the software components remain segregated, each with its own version and deployment story, even if some share the same team. We would like to be able to split the team if it grows too large, and being able to hand off various services to different teams would be a major benefit. We can’t do that if the services are tightly coupled or share process, versioning or deployment.

We should avoid having multiple teams work on the same components. This is an anti-pattern, and is in some ways worse than having a single large team working on an oversized single codebase, because the communication barriers between the teams lead to even worse feelings of lack of ownership and control.
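
The ownership rule described here (one team may own several components, but no component should have several teams) is simple enough to check mechanically. The sketch below is only an illustration: the function name and the team and service names are all made up, and the input is assumed to be a plain list of (team, component) pairs.

```python
from collections import defaultdict


def find_shared_components(ownership: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Return every component worked on by more than one team.

    ownership is a list of (team, component) pairs. A team appearing with
    several components is fine; a component appearing with several teams
    is the anti-pattern.
    """
    teams_by_component: dict[str, set[str]] = defaultdict(set)
    for team, component in ownership:
        teams_by_component[component].add(team)
    return {
        component: teams
        for component, teams in teams_by_component.items()
        if len(teams) > 1
    }


if __name__ == "__main__":
    # Hypothetical organisation: "payments" owns two services (fine),
    # but "search-service" is shared by two teams (the anti-pattern).
    ownership = [
        ("payments", "billing-service"),
        ("payments", "invoice-service"),
        ("search", "search-service"),
        ("catalogue", "search-service"),
    ]
    for component, teams in find_shared_components(ownership).items():
        print(f"{component} is shared by: {', '.join(sorted(teams))}")
```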

The communication requirements between decoupled teams building decoupled software are minimised. Taking the example of the internet again, it’s often possible to use an API provided by another company without any direct communication if the process is simple and the documentation sufficient. The communication should not require any discussion of software process or implementation, which are internal to the team. Instead, communication should be about delivering features, service levels, and resourcing.

An organisation of decoupled software teams building decoupled software should be easier to manage than the alternatives. The larger organization should focus on giving the teams clear goals and requirements in terms of features and service levels. The resource requirements should come from the team, but can be used by the organization to measure return on investment.

Decoupled Teams Building Decoupled Software

Decoupling software and teams is key to building a high performance software organisation. My anecdotal experience supports this view. I’ve worked in organisations where teams were segregated by software function or software layer, or even by customer. I’ve also worked in chaotic large teams on a single codebase. All of these suffer from the scaling problems discussed above. The happiest experiences were always where my team was a complete software unit independently building, testing and deploying decoupled services. But you don’t have to rely on my anecdotal evidence: the book Accelerate (described above) has survey data to support this view.