As the Chinese curse has it, ‘May you live in interesting times’. These are certainly interesting times for the UK’s public sector. The coalition government has stated that it intends to cut non-health public services by 25 to 40%, the biggest cuts since the Second World War. It also says that most of these cuts can be achieved through efficiency savings. I personally think that it can cut that much from public sector IT and leave it healthier, but it needs to be done in the right way.

In the private sector money rules. Capitalism is an ongoing process of Schumpeterian creative destruction as firms are created, compete for profits and die. Survival depends on firms cost-cutting and innovating. The public sector exists in an entirely different context. How do you create a public sector framework that incentivises efficiency and encourages innovation, and more importantly, allows for failure? This has been a question for every government since the invention of taxes.

Well, there is an evolving, self-organising system, not based on a monetary economy, that often beats Capitalism at its own game. It produces more variety and better quality, and it out-innovates too. It is, of course, the world of open-source software (OSS), one of the most profound and utterly unexpected outcomes of the internet. What is surprising is that it hasn’t been studied in more detail by policy wonks. I believe that public sector organisations could be transformed by learning lessons from OSS, and this especially applies to public sector IT.

Here’s what the government should do:

Every business is a software business

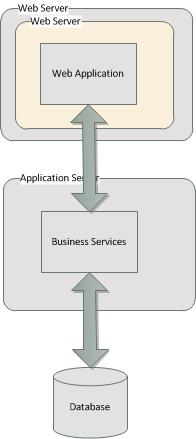

A common refrain from managers, and not only in the public sector, although you hear it there more than anywhere else, is “We are not a software business”. Wrong. Every business in the 21st century is a software business. Much of the work of the public sector is information management: tax collection, benefits, regulation, health records, the list goes on. This work has IT at its core.

Unfortunately the prevailing trend has been to outsource software development and infrastructure. The UK civil service has gone from being a focus of expertise to being largely hollowed out. This needs to change. The civil service needs to put expertise in IT at its core. In the same way that the government is putting doctors back in charge of the NHS, it needs to put geeks in charge of IT.

Build IT skills in the civil service

The civil service should work hard at becoming a major centre of UK IT skills. That means bringing the majority of IT expertise back in house and fostering a culture that makes the civil service a place where geeks want to work. Simple things like dress codes are important. There is simply no reason why a programmer should not be allowed to come to work in a t-shirt, shorts and flip-flops. In fact there’s no real reason for them to come to work at all – there is no need for people to be physically co-located in 2010. Insisting on this severely limits the pool of candidates. Public sector managers need to understand what motivates knowledge workers and handle them appropriately.

There also has to be a career path for developers that allows great coders to stay being great coders and not have to become managers. The UK IT workforce is warped. Because coding skills are undervalued, salaries tend to be low compared to jobs that require a similar level of expertise. This means that programmers almost always join the contract market at some point in their career, simply because it better reflects the real supply and demand for their skills. Many teams reflect this, with the junior members being predominantly permanent staff and the senior ones being contractors. This is a problem, because permanent staff are almost impossible to fire. When cost cutting is demanded, the contractors are laid off first. This guts the organisation of its core skills.

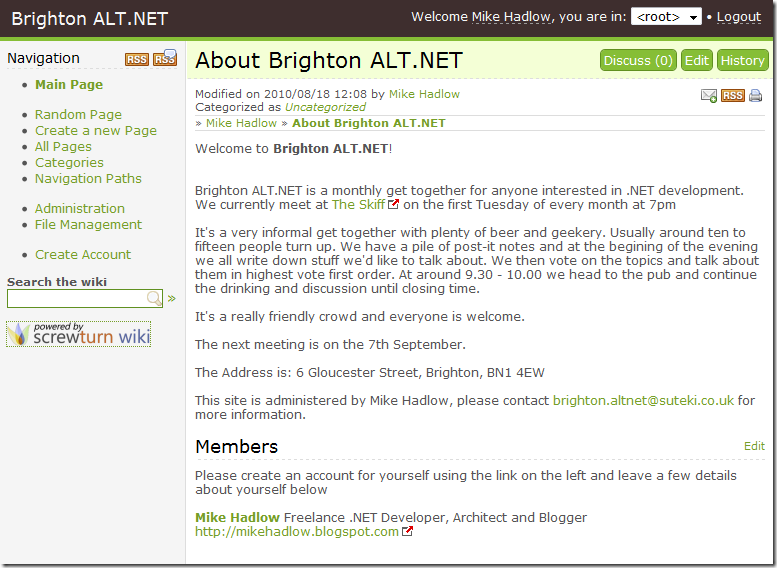

So pay rates for programmers need to be higher. But how do you know if a programmer is good enough to be paid these new high rates? There are no realistic qualifications in IT. I believe that a reputational system based on OSS is the best way to judge a programmer’s quality. It seems natural that a contributor to a successful, high-profile system should be valued more than someone without any demonstrable experience. Open-sourcing government IT would automatically build a transparent reputational market for IT skills.

Fix procurement

IT procurement in the public sector is broken. The seemingly laudable aim of regulating and monitoring procurement, especially of high-value contracts, has put a huge barrier in front of businesses that wish to supply the public sector. This distorts markets and encourages rent-seeking behaviour. Only larger companies with deep pockets are able to afford the lengthy process of bidding for public sector contracts. And of course, once you’ve spent millions on securing a contract, you expect some payback. This leads to hugely inflated costs for software. Not only that, but the main effort and expertise of suppliers is focused on pre-sales and contract negotiation. Once the contract is signed, you often find that more junior managers and technicians take over.

As a freelance developer I have been hired several times by large suppliers to work on teams developing software for the public sector. There is no secret ingredient that they have. Often the project management and in-house development skills are poor to say the least. In many cases the team building the software is almost entirely freelancers, in which case the end-client could have hired them directly at a fraction of the cost, and probably experienced less friction in the process.

The outsourcing trend means that civil servants with little software development experience themselves have to manage contractual relationships with suppliers. They don’t know what questions to ask. They often have little idea of the cost of particular features or applications and have been acclimatised by suppliers to expect large numbers. Before long you have the situation where a few tens of thousands of pounds for some fields to be changed seems perfectly reasonable. I kid you not, I’ve seen it with my own eyes.

It’s not only bespoke software that suffers this pathology; it almost always applies to large COTS (Commercial Off The Shelf) procurements too. They are almost never wholly ‘off the shelf’. There’s always some degree of customisation required, and the cost of customising a shrink-wrapped product is always more than modifying your own internally built software.

Embrace open source

Because of the lack of internal IT skills and the corresponding reliance on external suppliers, there is a very negative attitude to open source software in the public sector. Of course you, dear reader, know full well that many open source software products are not only much cheaper (as in free) than their commercial equivalents, but are also often better quality. But without the internal expertise to evaluate them your only option is to listen to salesmen from commercial suppliers, and that is pretty much how most products get chosen in the public sector.

A civil service skilled in IT would be able to not only evaluate open source products, but also contribute to them and instigate new ones. The network effects would be significant and would have the potential to turn the UK into a world leader in open source development. Applications, infrastructure and innovations could be far more readily shared between departments, and it would also be a way for tax pounds to contribute directly to the wider economy. Indeed, it only seems fair that a product produced at the taxpayer’s expense should be available to the taxpayer to use.

It should be policy to only consider a commercial product if it can be demonstrated that no practical open source alternative exists. Where there is no existing open source alternative, the first consideration should be to extend an existing project or create a new one from scratch. All software developed by the public sector should be open source by default. Only where there are demonstrable security concerns should this not be done.

By building software in the open, public sector organisations will be able to learn from each other in a real and direct way. This means being public about failures as well as successes. In the same way that I know the names of the developers of NHibernate and the Castle Project (two very successful open source projects), the names and reputations of the technologists and managers behind successful public sector projects will also be well known. When an organisation is considering a major new build they will know who to turn to, not on the basis of slick presentations, but on real measurable results.

An example of successful in-house development

I’ve just finished a very pleasant year working as a technical-architecture consultant with the UK’s Pensions Regulator (TPR) which is part of the Department for Work and Pensions. It is public knowledge that this department has been asked to prepare estimates for 25% to 40% cuts.

TPR’s work as a regulator is predominantly casework based. They monitor UK private sector pensions, risk-assess them, and provide advice to trustees. The case management process has been handled historically using a combination of off-the-shelf software products, spreadsheets and Word documents. A dedicated case-management system was desperately needed.

Now you might think that you can buy a case-management system off the shelf, and yes, you can. But you can’t buy one specialised for managing pension regulation. Any product would have to be deeply customised. It would also need to be integrated with the rest of TPR’s systems to be effective. Some effort was expended looking at commercial alternatives, most with costs of around £100,000+. This does not include the customisations, which would, of course, have to be carried out by the supplier. The cost of the consultancy work could only be estimated from detailed specifications, the creation of which would have imposed considerable extra cost on TPR. There is little doubt that the internal and consultancy costs would have easily outweighed the ticket price.

But luckily TPR, for historical reasons, has a strong internal software development team. So after some debate, it was decided to build a bespoke case-management system from scratch. This took a team of two developers around 7 weeks to complete, with a conservatively estimated cost of around £30,000. That’s right, building bespoke software in-house was at most 30% of the cost of using an external supplier, measured against the ticket price alone. Because the system was built in-house, there was no need for lengthy contract negotiations and detailed specifications. Instead, we built it incrementally with constant input from the case management team. They got exactly what they needed at a fraction of the cost.
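For anyone who wants to check the sums, here is a minimal sketch of the comparison in Python. The figures are the rounded ones quoted above; the variable and function names are purely illustrative, and the commercial figure is only the ticket price, so it leaves out the customisation and consultancy costs that would have pushed the real gap wider.

```python
# Rounded figures quoted in the text. The commercial figure is a lower bound:
# it is the ticket price only, excluding customisation and consultancy.
COMMERCIAL_TICKET_PRICE_GBP = 100_000  # "around £100,000+"
IN_HOUSE_COST_GBP = 30_000             # "around £30,000"
DEVELOPERS = 2
WEEKS = 7


def cost_ratio(in_house: float, commercial: float) -> float:
    """Return the in-house cost as a fraction of the commercial cost."""
    return in_house / commercial


def cost_per_developer_week(total: float, developers: int, weeks: int) -> float:
    """Return the implied cost of one developer for one week."""
    return total / (developers * weeks)


ratio = cost_ratio(IN_HOUSE_COST_GBP, COMMERCIAL_TICKET_PRICE_GBP)
per_dev_week = cost_per_developer_week(IN_HOUSE_COST_GBP, DEVELOPERS, WEEKS)

print(f"In-house cost as a share of the ticket price: {ratio:.0%}")
print(f"Implied cost per developer-week: £{per_dev_week:,.0f}")
```

Because the ticket price is a floor rather than a total, the 30% this prints is an upper bound on the in-house share, which is why the claim is "at most 30%".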

Going forward, there won’t be any need for expensive maintenance contracts and huge costs for each change or refinement, because it can all be done in-house. Of course TPR need to maintain their development team, but I firmly believe that those guys are a lot cheaper than the outsourced alternative.

It’s a piece of software that I was very proud to have been a part of. It would have been useful to other public (and private) sector organisations, not simply as a casework system, but as a demonstration of a particular technology stack and methodology. It would also have been an excellent advertisement for the technical prowess of the team. It’s a real shame that this great success story can’t be told outside the confines of TPR. You will never get to see the beautifully factored code with over 1500 unit tests, or know the names of the people who put it together.

So, Mr Cameron, here’s something truly radical you could do that fits wholeheartedly with your aims for a more open and inclusive government:

open source it!