I’ve been writing a high-level ‘architectural vision’ document for my current clients. I thought it might be nice to republish bits of it here. This is part 2. The first part is here.

My client has a core product that is heavily customised for each customer. In this post we look at the different kinds of components that make up this architecture: how some are common services that make up the core product, and how others might be bespoke pieces for a particular customer. We also examine the difference between workflow, services and endpoints.

It is very important that we make a clear distinction between components that we write as part of the product and bespoke components that we write for a particular customer. We should not put customer specific code into product components and we should not replicate common product code in customer specific pieces.

Because we are favouring small single-purpose components over large multi-purpose monolithic applications, it should be easy for us to differentiate between product and customer pieces.

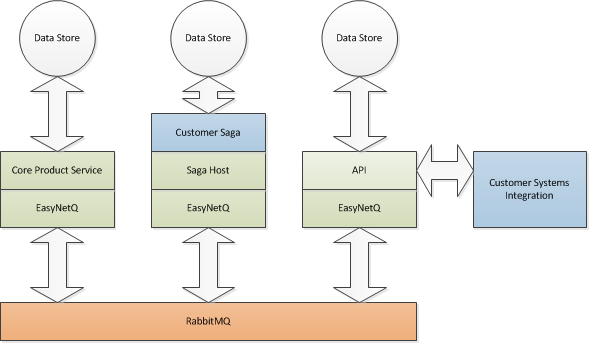

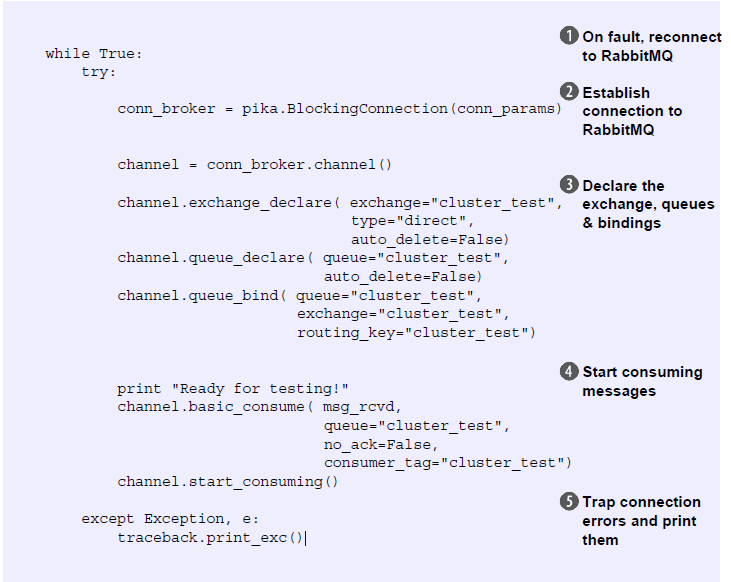

There are three main kinds of components that make up a working system: services, workflow and endpoints. The diagram below shows how they communicate via EasyNetQ, our open-source infrastructure layer. The green parts are product pieces. The blue parts are bespoke customer specific pieces.

Services

Services are components that implement a piece of the core product. An example of a service is a component called Renderer that takes templates and data and does a kind of mail-merge. Because Renderer is a service it should never contain any customer specific code. Of course customer requirements might mean that enhancements need to be made to Renderer, but these enhancements should always be done with the understanding that Renderer is part of the product. We should be able to deploy the enhanced Renderer to all our customers without the enhancement affecting them.

Services (in fact all components) should maintain their own state using a service specific database. This database should not be shared with other services. The service should communicate via EasyNetQ with other services and not use a shared database as a back-channel. In the case of an updated Renderer, templates would be stored in Renderer’s local database. Any new or updated templates would arrive as messages via EasyNetQ. Each data item to be rendered would also arrive as a message, and once the data has been rendered, the document should also be published via EasyNetQ.

The core point here is that each service should have a clear API, defined by the message types that it subscribes to and publishes. We should be able to fully exercise a component via messages independently of other services. Because the service’s database is only used by the service, we should be able to flexibly modify its schema in response to changing requirements, without having to worry about the impact that will have on other parts of the system.
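To make the idea of a message-defined API concrete, here is a minimal sketch in Python. It is not EasyNetQ code: `InMemoryBus` is a hypothetical stand-in for the bus, the message types `TemplateUpdated`, `RenderRequest` and `DocumentRendered` are invented names, and a plain dictionary stands in for Renderer's private database.

```python
from collections import defaultdict
from dataclasses import dataclass
from string import Template


class InMemoryBus:
    """Hypothetical stand-in for the message bus; not EasyNetQ's API."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, message_type, handler):
        self._handlers[message_type].append(handler)

    def publish(self, message):
        # Deliver to every handler registered for this exact message type.
        for handler in list(self._handlers[type(message)]):
            handler(message)


# The message types below are the service's entire public API (names assumed).
@dataclass(frozen=True)
class TemplateUpdated:
    template_id: str
    body: str


@dataclass(frozen=True)
class RenderRequest:
    template_id: str
    data: dict


@dataclass(frozen=True)
class DocumentRendered:
    template_id: str
    document: str


class Renderer:
    def __init__(self, bus):
        self._bus = bus
        self._templates = {}  # stands in for the service's private database
        bus.subscribe(TemplateUpdated, self._on_template_updated)
        bus.subscribe(RenderRequest, self._on_render_request)

    def _on_template_updated(self, msg):
        self._templates[msg.template_id] = msg.body

    def _on_render_request(self, msg):
        body = self._templates[msg.template_id]
        document = Template(body).substitute(msg.data)
        # Publish the result; what happens next is not the Renderer's concern.
        self._bus.publish(DocumentRendered(msg.template_id, document))


bus = InMemoryBus()
Renderer(bus)
rendered = []
bus.subscribe(DocumentRendered, rendered.append)
bus.publish(TemplateUpdated("delay", "Dear $name, flight $flight is delayed."))
bus.publish(RenderRequest("delay", {"name": "Ann", "flight": "BA123"}))
print(rendered[0].document)
```

Nothing outside the class touches `_templates`, so in this sketch the storage could change shape without any other component noticing.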

It’s important that services do not implement workflow. As we’ll see in the next section, a core feature of this architecture is that workflow and services are separate. Renderer, for example, should not make decisions about what happens to a document after it is rendered, or implement batching logic. These are separate concerns.

Workflow

Workflow components are customer specific components that describe what happens in response to a specific business trigger. They also implement customer specific business rules. For an airline, an example would be a workflow that is triggered by a flight event, say a delay. When the component receives the delay message, it might first retrieve the manifest for the flight by sending a request to a manifest service, then render a message telling each passenger about the delay by sending a render request to Renderer, and finally send that message by email by publishing an email request. It would typically also implement the business rules that describe, for example, when a delay is considered important.
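The delay workflow can be sketched roughly as follows. This is an illustration only, written in Python against a hypothetical in-memory bus rather than EasyNetQ. All message names, the 30 minute threshold and the use of the passenger's email address as a correlation id are assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


class InMemoryBus:
    """Hypothetical stand-in for the message bus; not EasyNetQ's API."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, message_type, handler):
        self._handlers[message_type].append(handler)

    def publish(self, message):
        for handler in list(self._handlers[type(message)]):
            handler(message)


@dataclass(frozen=True)
class FlightDelayed:
    flight: str
    minutes: int

@dataclass(frozen=True)
class ManifestRequest:
    flight: str

@dataclass(frozen=True)
class ManifestResponse:
    flight: str
    passenger_emails: tuple

@dataclass(frozen=True)
class RenderRequest:
    correlation_id: str
    template_id: str
    data: dict

@dataclass(frozen=True)
class DocumentRendered:
    correlation_id: str
    document: str

@dataclass(frozen=True)
class EmailRequest:
    to: str
    body: str


class DelayNotificationSaga:
    MIN_DELAY_MINUTES = 30  # hypothetical customer specific business rule

    def __init__(self, bus):
        self._bus = bus
        self._delays = {}  # saga state: flight -> delay in minutes
        bus.subscribe(FlightDelayed, self._on_delay)
        bus.subscribe(ManifestResponse, self._on_manifest)
        bus.subscribe(DocumentRendered, self._on_rendered)

    def _on_delay(self, msg):
        if msg.minutes < self.MIN_DELAY_MINUTES:
            return  # not important enough to notify passengers
        self._delays[msg.flight] = msg.minutes
        self._bus.publish(ManifestRequest(msg.flight))

    def _on_manifest(self, msg):
        minutes = self._delays.pop(msg.flight, None)
        if minutes is None:
            return  # no delay pending for this flight
        for email in msg.passenger_emails:
            data = {"flight": msg.flight, "minutes": minutes}
            # The passenger's email doubles as the correlation id.
            self._bus.publish(RenderRequest(email, "delay", data))

    def _on_rendered(self, msg):
        self._bus.publish(EmailRequest(msg.correlation_id, msg.document))


bus = InMemoryBus()
DelayNotificationSaga(bus)
# Stub handlers standing in for the real manifest service and Renderer.
bus.subscribe(ManifestRequest, lambda m: bus.publish(
    ManifestResponse(m.flight, ("ann@example.com", "bob@example.com"))))
bus.subscribe(RenderRequest, lambda m: bus.publish(DocumentRendered(
    m.correlation_id,
    f"Flight {m.data['flight']} is delayed by {m.data['minutes']} minutes.")))
emails = []
bus.subscribe(EmailRequest, emails.append)
bus.publish(FlightDelayed("BA123", 10))  # below the threshold: ignored
bus.publish(FlightDelayed("BA123", 45))  # triggers the full workflow
```

The saga only decides what happens next and when. The manifest lookup and the rendering stay in their own services, which is why stubs can replace them here without changing the saga.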

By separating workflow from services, we can flexibly implement customer requirements by creating custom workflows without having to customise our services. We can deliver bespoke customer solutions on a common product platform.

We call these workflow pieces ‘Sagas’, a commonly used term in the industry for a long-running business process. Because sagas all need a common infrastructure for hosting, EasyNetQ includes a ‘SagaHost’. SagaHost is a Windows service that hosts sagas, just like it says on the box. This means that the sagas themselves are written as simple assemblies that can be xcopy deployed.

Sagas will usually require a database to store their state. Once again, this should be saga specific and not a database shared by other services. However, a single customer workflow might well consist of several distinct sagas. It makes sense to think of these as a unit, and they may well share a single database.

Endpoints

Endpoints are components that communicate with the outside world. They are a bridge between our internal AMQP messaging infrastructure and our external HTTP API. The only way into and out of our product should be via this API. We want to be able to integrate with diverse customer systems, but these integration pieces should be implemented as bridges between the customer system and our official API, rather than as bespoke pieces that publish or subscribe directly to the message bus.

Endpoints come in two flavours: externally triggered and internally triggered. An externally triggered endpoint is where communication is initiated by the customer. An example of this would be a flight event. These components are best implemented as web services that simply wait to be called and then publish an appropriate message using EasyNetQ.

An internally triggered endpoint is where communication is triggered by an internal event. An example of this would be the completion of a workflow with the final step being an update of a customer system. Such an endpoint would be implemented as a Windows Service that subscribes to the update message using EasyNetQ and implements an HTTP client that makes a web service request to a configured endpoint.
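The two flavours might look something like this in outline. The sketch is in Python with a hypothetical in-memory bus, not EasyNetQ. The class names, the message types, the URL and the injected `http_post` callable are all assumptions. A real endpoint would sit behind a web framework and use a real HTTP client.

```python
import json
from collections import defaultdict
from dataclasses import dataclass


class InMemoryBus:
    """Hypothetical stand-in for the message bus; not EasyNetQ's API."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, message_type, handler):
        self._handlers[message_type].append(handler)

    def publish(self, message):
        for handler in list(self._handlers[type(message)]):
            handler(message)


@dataclass(frozen=True)
class FlightDelayed:
    flight: str
    minutes: int


@dataclass(frozen=True)
class CustomerUpdate:
    flight: str
    status: str


class FlightEventEndpoint:
    """Externally triggered: the customer calls us, we publish a message."""

    def __init__(self, bus):
        self._bus = bus

    def handle_post(self, body):
        # A web framework would call this with the raw request body.
        event = json.loads(body)
        self._bus.publish(FlightDelayed(event["flight"], int(event["minutes"])))
        return 202  # accepted for asynchronous processing


class CustomerUpdateEndpoint:
    """Internally triggered: a message arrives, we call the customer."""

    def __init__(self, bus, url, http_post):
        self._url = url  # the configured customer endpoint
        self._http_post = http_post  # injected so a real HTTP client can be swapped in
        bus.subscribe(CustomerUpdate, self._on_update)

    def _on_update(self, msg):
        payload = json.dumps({"flight": msg.flight, "status": msg.status})
        self._http_post(self._url, payload)


bus = InMemoryBus()
published, calls = [], []
bus.subscribe(FlightDelayed, published.append)
inbound = FlightEventEndpoint(bus)
CustomerUpdateEndpoint(bus, "https://customer.example/updates",
                       lambda url, body: calls.append((url, body)))

status = inbound.handle_post('{"flight": "BA123", "minutes": 45}')
bus.publish(CustomerUpdate("BA123", "passengers notified"))
```

Neither endpoint contains business logic. Each one only translates between an HTTP call and a message, which keeps customer integration code off the bus.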

The Importance of Testability

A core requirement for any component is that it should be testable. It should be possible to test Services and Workflow (Sagas) simply by sending them messages and checking that they respond with the correct messages. Because ‘back-channel’ communication, especially via shared databases, is not allowed we can treat these components as black-boxes that always respond with the same output to the same input.

Endpoints are slightly more complicated to test. It should be possible to send an externally triggered endpoint a request and watch for the message that’s published. An internally triggered endpoint should make a web request when it receives the correct message.
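As a rough illustration of this black-box style, the sketch below tests a small component purely by publishing messages and inspecting what comes back. It is written in Python with the standard `unittest` module. `InMemoryBus`, `DelayFilter`, the message types and the 30 minute threshold are all hypothetical and exist only for the example.

```python
import unittest
from collections import defaultdict
from dataclasses import dataclass


class InMemoryBus:
    """Hypothetical stand-in for the message bus; not EasyNetQ's API."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, message_type, handler):
        self._handlers[message_type].append(handler)

    def publish(self, message):
        for handler in list(self._handlers[type(message)]):
            handler(message)


@dataclass(frozen=True)
class FlightDelayed:
    flight: str
    minutes: int


@dataclass(frozen=True)
class ImportantDelay:
    flight: str


class DelayFilter:
    """Component under test: republishes only delays over a threshold."""

    def __init__(self, bus, threshold=30):
        self._bus = bus
        self._threshold = threshold
        bus.subscribe(FlightDelayed, self._on_delay)

    def _on_delay(self, msg):
        if msg.minutes >= self._threshold:
            self._bus.publish(ImportantDelay(msg.flight))


def exercise(inputs):
    """Feed messages to a fresh component and collect what it publishes."""
    bus = InMemoryBus()
    DelayFilter(bus)
    outputs = []
    bus.subscribe(ImportantDelay, outputs.append)
    for message in inputs:
        bus.publish(message)
    return outputs


class DelayFilterBlackBoxTest(unittest.TestCase):
    def test_long_delay_publishes_important_delay(self):
        self.assertEqual(exercise([FlightDelayed("BA123", 45)]),
                         [ImportantDelay("BA123")])

    def test_short_delay_publishes_nothing(self):
        self.assertEqual(exercise([FlightDelayed("BA123", 5)]), [])


suite = unittest.defaultTestLoader.loadTestsFromTestCase(DelayFilterBlackBoxTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

The tests never reach into the component's internals. They only see messages going in and messages coming out, so the same tests would still apply if the component were rewritten.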

Developers should provide automated tests to QA for any modification to a component.