It’s really nice if you can decouple your external API from the details of application segregation and deployment.

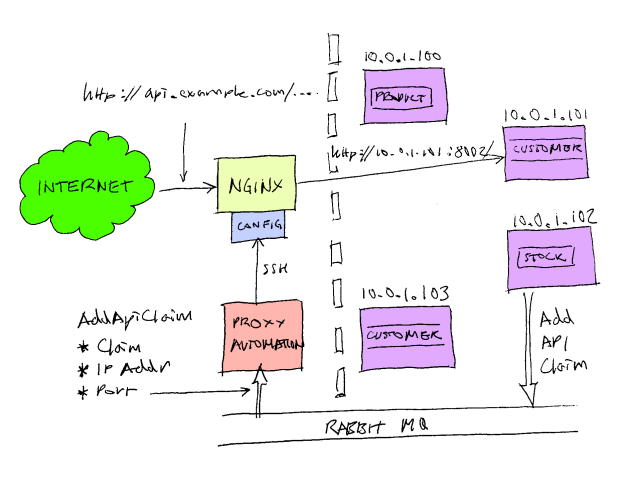

In a previous post I explained some of the benefits of using a reverse proxy. On my current project we’re building a distributed, service-oriented architecture that also exposes an HTTP API, and we’re using a reverse proxy to route requests addressed to our API to individual components. We have chosen the excellent Nginx web server to serve as our reverse proxy; it’s fast, reliable and easy to configure. We use it to aggregate multiple services exposing HTTP APIs into a single URL space. So, for example, when you type:

http://api.example.com/product/pinstripe_suit

It gets routed to:

http://10.0.1.101:8001/product/pinstripe_suit

But when you go to:

http://api.example.com/customer/103474783

It gets routed to:

http://10.0.1.104:8003/customer/103474783

To the consumer of the API it appears that they are exploring a single URL space (http://api.example.com/blah/blah), but behind the scenes the different top level segments of the URL route to different back end servers. /product/… routes to 10.0.1.101:8001, but /customer/… routes to 10.0.1.104:8003.
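To make the mapping concrete, here is a minimal Python sketch of the rule Nginx ends up applying. It is only an illustration, not part of our system: the `ROUTES` table and the `resolve_backend` function are hypothetical names, and the table simply mirrors the two examples above.

```python
from urllib.parse import urlsplit

# Hypothetical routing table, mirroring the two examples above.
ROUTES = {
    "product": "10.0.1.101:8001",
    "customer": "10.0.1.104:8003",
}


def resolve_backend(url: str) -> str:
    """Return the back-end URL that a public API URL would be proxied to."""
    parts = urlsplit(url)
    # The first non-empty path segment selects the back end.
    segment = parts.path.lstrip("/").split("/", 1)[0]
    backend = ROUTES[segment]  # raises KeyError for an unclaimed segment
    target = f"http://{backend}{parts.path}"
    if parts.query:
        target += "?" + parts.query
    return target


print(resolve_backend("http://api.example.com/product/pinstripe_suit"))
```

The path is passed through unchanged; only the host and port differ between the public URL and the back-end URL.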

We also want this to be self-configuring. So, say I want to create a new component of the system that records stock levels. Rather than extending an existing component, I want to be able to write a stand-alone executable or service that exposes an HTTP endpoint, have it be automatically deployed to one of the hosts in my cloud infrastructure, and have Nginx automatically route requests addressed to http://api.example.com/stock/whatever to my new component.

We also want to load balance these back end services. We might want to deploy several instances of our new stock API and have Nginx automatically round robin between them.
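Round robin just means handing each new request to the next instance in the list and wrapping around at the end. A tiny Python sketch of the idea, with made-up addresses for two stock instances (Nginx does this internally; the `round_robin` helper is purely illustrative):

```python
from itertools import cycle
from typing import Iterator


def round_robin(instances: list[str]) -> Iterator[str]:
    """Yield back-end addresses in rotation, one per incoming request."""
    return cycle(instances)


# Hypothetical addresses for two instances of the stock API.
picker = round_robin(["10.0.2.1:8001", "10.0.2.2:8001"])
first_four = [next(picker) for _ in range(4)]
print(first_four)
```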

We call each top-level segment (/stock, /product, /customer) a claim. A component publishes an ‘AddApiClaim’ message over RabbitMQ when it comes online. This message has three fields: ‘Claim’, ‘ipAddress’, and ‘PortNumber’. We have a special component, ProxyAutomation, that subscribes to these messages and rewrites the Nginx configuration as required. It uses SSH and SCP to log into the Nginx server, transfer the various configuration files, and instruct Nginx to reload its configuration. We use the excellent SSH.NET library to automate this.
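As a rough illustration of what a subscriber has to work with, here is a Python sketch of the claim message. Our real component is .NET, so treat everything here as an assumption: the JSON encoding, the `AddApiClaim` dataclass, the `parse_claim` helper, and the choice to strip the leading slash from the claim are all hypothetical. Only the three field names come from the description above.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class AddApiClaim:
    """One component instance announcing the URL segment it serves."""
    claim: str        # top-level segment, stored without slashes, e.g. "stock"
    ip_address: str
    port_number: int

    @property
    def address(self) -> str:
        """The host:port pair that would go into an upstream 'server' line."""
        return f"{self.ip_address}:{self.port_number}"


def parse_claim(payload: bytes) -> AddApiClaim:
    """Decode a message body, assuming (hypothetically) a JSON encoding."""
    data = json.loads(payload)
    return AddApiClaim(
        claim=data["Claim"].strip("/"),
        ip_address=data["ipAddress"],
        port_number=int(data["PortNumber"]),
    )


message = parse_claim(
    b'{"Claim": "/stock", "ipAddress": "10.0.0.23", "PortNumber": 8001}'
)
print(message.claim, message.address)
```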

A really nice thing about Nginx configuration is wildcard includes. Take a look at our top level configuration file:

```nginx
...

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    keepalive_timeout 65;

    # Wildcard include: pull in every .conf file in conf.d
    include /etc/nginx/conf.d/*.conf;
}
```

The wildcard include on the last line of the http block says: take any *.conf file in the conf.d directory and add it here.

Inside conf.d is a single file for all api.example.com requests:

```nginx
# One upstream.<claim>.conf file per claim, listing its back-end instances
include /etc/nginx/conf.d/api.example.com.conf.d/upstream.*.conf;

server {
    listen 80;
    server_name api.example.com;

    # One location.<claim>.conf file per claim, routing its URL segment
    include /etc/nginx/conf.d/api.example.com.conf.d/location.*.conf;

    location / {
        root /usr/share/nginx/api.example.com;
        index index.html index.htm;
    }
}
```

This basically says: listen on port 80 for any requests with a host header of ‘api.example.com’.

This has two includes. The first one, the upstream.*.conf include at the top of the file, I’ll talk about later. The second one, inside the server block, says ‘take any file named location.*.conf in the subdirectory api.example.com.conf.d and add it to the configuration’. Our proxy automation component adds new components (AKA API claims) by dropping new location.*.conf files in this directory. For example, for our stock component it might create a file, ‘location.stock.conf’, like this:

```nginx
location /stock/ {
    proxy_pass http://stock;
}
```

This simply tells Nginx to proxy all requests addressed to api.example.com/stock/… to the upstream servers defined at ‘stock’. This is where the other include mentioned above comes in, ‘upstream.*.conf’. The proxy automation component also drops in a file named upstream.stock.conf that looks something like this:

```nginx
upstream stock {
    server 10.0.0.23:8001;
    server 10.0.0.23:8002;
}
```

This tells Nginx to round-robin all requests to api.example.com/stock/ to the given sockets. In this example it’s two components on the same machine (10.0.0.23), one on port 8001 and the other on port 8002.
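Both generated files are small enough to produce with a couple of string templates. The following Python sketch shows one way that could look; it is not our actual ProxyAutomation code, and the `render_location` and `render_upstream` helpers are hypothetical names.

```python
def render_location(claim: str) -> str:
    """Contents of location.<claim>.conf: proxy /<claim>/ to the named upstream."""
    return f"location /{claim}/ {{\n    proxy_pass http://{claim};\n}}\n"


def render_upstream(claim: str, servers: list[str]) -> str:
    """Contents of upstream.<claim>.conf: one 'server' line per instance."""
    lines = "".join(f"    server {address};\n" for address in servers)
    return f"upstream {claim} {{\n{lines}}}\n"


print(render_location("stock"))
print(render_upstream("stock", ["10.0.0.23:8001", "10.0.0.23:8002"]))
```

The upstream name and the location prefix are both derived from the claim, which is what ties the two files together.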

As instances of the stock component get deployed, new entries are added to upstream.stock.conf. Similarly, when components get uninstalled, the entry is removed. When the last entry is removed, the whole file is also deleted.
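The add and remove bookkeeping can be sketched in Python as follows. This is a simplified, local-filesystem illustration under my own assumptions: the real component pushes files to the Nginx server over SSH and then triggers a reload, and the helper names here (`read_servers`, `write_servers`, `add_instance`, `remove_instance`) are hypothetical. The sketch only deletes the upstream file, as described above; presumably the matching location file has to go at the same time, since it would otherwise refer to an upstream that no longer exists.

```python
import re
from pathlib import Path

# Matches lines such as "    server 10.0.0.23:8001;"
SERVER_LINE = re.compile(r"^\s*server\s+(\S+);\s*$")


def read_servers(path: Path) -> list[str]:
    """Return the host:port entries currently listed in an upstream file."""
    if not path.exists():
        return []
    matches = (SERVER_LINE.match(line) for line in path.read_text().splitlines())
    return [m.group(1) for m in matches if m]


def write_servers(path: Path, claim: str, servers: list[str]) -> None:
    """Rewrite the upstream file, or delete it when no servers remain."""
    if not servers:
        path.unlink(missing_ok=True)
        return
    body = "".join(f"    server {address};\n" for address in servers)
    path.write_text(f"upstream {claim} {{\n{body}}}\n")


def add_instance(conf_dir: Path, claim: str, address: str) -> None:
    """Register one more instance of a component (idempotent)."""
    path = conf_dir / f"upstream.{claim}.conf"
    servers = read_servers(path)
    if address not in servers:
        servers.append(address)
    write_servers(path, claim, servers)


def remove_instance(conf_dir: Path, claim: str, address: str) -> None:
    """Drop one instance; the file disappears with the last entry."""
    path = conf_dir / f"upstream.{claim}.conf"
    servers = [s for s in read_servers(path) if s != address]
    write_servers(path, claim, servers)
```

Re-reading the file on every change keeps the sketch stateless, so it could be restarted at any point without losing track of which instances are registered.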

This infrastructure allows us to decouple infrastructure configuration from component deployment. We can scale the application up and down by simply adding new component instances as required. As a component developer, I don’t need to do any proxy configuration, just make sure my component publishes add and remove API claim messages and I’m good to go.
