TL:DR Successive, well intentioned, changes to architecture and technology throughout the lifetime of an application can lead to a fragmented and hard to maintain code base. Sometimes it is better to favour consistent legacy technology over fragmentation.

An ‘anti-pattern’ describes a commonly encountered pathology or problem in software development. The Lava Layer (or Lava Flow) anti-pattern is well documented (here and here for example). It’s symptoms are a fragile and poorly understood codebase with a variety of different patterns and technologies used to solve the same problems in different places. I’ve seen this pattern many times in enterprise software. It’s especially prevalent in situations where the software is large, mission critical, long-lived and where there is high staff turn-over. In this post I want to show some of the ways that it occurs and how it’s often driven by a very human desire to improve the software.

To illustrate I’m going to tell a story about a fictional piece of software in a fictional organisation with made up characters, but closely based on real examples I’ve witnessed. In fact, if I’m honest, I’ve been several of these characters at different stages of my career. I’m going to concentrate on the data-access layer (DAL) technology and design to keep the story simple, but the general principles and scenario can and do apply to any part of the software stack.

Let’s set the scene…

The Royal Churchill is a large hospital in southern England. It has a sizable in-house software team that develop and maintain a suite of applications that support the hospital’s operations. One of these is WidgetFinder, a physical asset management application that is used to track the hospital’s large collection of physical assets; everything from beds to CT scanners. Development on WidgetFinder was started in 2005. The software team that wrote version 1 was lead by Laurence Martell, an developer with may years experience building client server systems based on VB/SQL Server. VB was in the process of being retired by Microsoft, so Laurence decided to build WidgetFinder with the relatively new ASP.NET platform. He read various Microsoft design guideline papers and a couple of books and decided to architect the DAL around the ADO.NET RecordSet. He and his team hand coded the DAL and exposed DataSets directly to the UI layer, as was demonstrated in the Microsoft sample applications. After seven months of development and testing, Version 1 of WidgetFinder was released and soon became central to the Royal Churchill’s operations. Indeed, several other systems, including auditing and financial applications, soon had code that directly accessed WidgetFinders database.

Like any successful enterprise application, a new list of requirements and extensions evolved and budget was assigned for version 2. Work started in 2008. Laurence had left and a new lead developer had been appointed. His name was Bruce Snider. Bruce came from a Java background and was critical of many of Laurence’s design choices. He was especially scornful of the use of DataSets: “an un-typed bag of data, just waiting for a runtime error with all those string indexed columns.” Indeed WidgetFinder did seem to suffer from those kinds of errors. “We need a proper object-oriented model with C# classes representing tables, such as Asset and Location. We can code gen most of the DAL straight from the relational schema.” He asked for time and budget to rewrite WidgetFinder from scratch, but this was rejected by the management. Why would they want to re-write a two year old application that was, as far as they were concerned, successfully doing its job? There was also the problem that many other systems relied on WidgetFinder’s database and they would need to be re-written too.

Bruce decided to write the new features of WidgetFinder using his OO/Code Gen approach and refactor any parts of the application that they had to touch as part of version 2. He was confident that in time his Code Gen DAL would eventually replace the hand crafted DataSet code. Version 2 was released a few months later. Simon, a new recruit on the team asked why some of the DAL was code generated, and some of it hand-coded. It was explained that there had been this guy called Lawrence who had no idea about software, but he was long gone.

A couple of years went by. Bruce moved on and was replaced by Ina Powers. The code gen system had somewhat broken down after Bruce had left. None of the remaining team really understood how it worked, so it was easier just to modify the code by hand. Ina found the code confusing and difficult to reason about. “Why are we hand-coding the DAL in this way? This code is so repetitive, it looks like it was written by an automation. Half of it uses DataSets and the other some half baked Active Record pattern. Who wrote this crap? If you hand code your DAL, you are stealing from your employer. The only sensible solution is an ORM. I recommend that we re-write the system using a proper domain model and NHibernate.” Again the business rejected a rewrite. “No problem, we will adopt an evolutionary approach: write all the new code DDD/NHibernate style, and progressively refactor the existing code as we touch it.” Many months later, Version 3 was released.

Mandy was a new hire. She’d listened to Ina’s description of how the application was architected around DDD with the data access handled by NHibernate, so she was surprised and confused to come across some code using DataSets. She asked Simon what to do. “Yeah, I think that code was written by some guy who was here before me. I don’t really know what it does. Best not to touch it in case something breaks.”

Ina, frustrated by management who didn’t understand the difficulty of maintaining such horrible legacy applications, left for a start-up where she would be able to build software from scratch. She was replaced by Gordy Bannerman who had years of experience building large scale applications. The WidgetFinder users were complaining about it’s performance. Some of the pages took 30 seconds or more to appear. Looking at the code horrified him: Huge Linq statements generating hundreds of individual SQL requests, no wonder it was slow. Who wrote this crap? “ORMs are a horrible leaky abstraction with all kinds of performance problems. We should use a lightweight data-access technology like Dapper. Look at Stack-Overflow, they use it. They also use only static methods for performance, we should do the same.” And so the cycle repeated itself. Version 4 was released a year later. It was buggier than the previous versions. Gordy had dismissed Ina’s love of unit testing. It’s hard to unit test code written mostly with static methods.

Mandy left to be replaced by Peter. Simon introduced him to the WidgetFinder code. “It’s not pretty. A lot of different things have been tried over the years and you’ll find several different ways of doing the same thing depending on where you look. I don’t argue, just get on with trawling through the never ending bug list. Hey, at least it’s a job.”

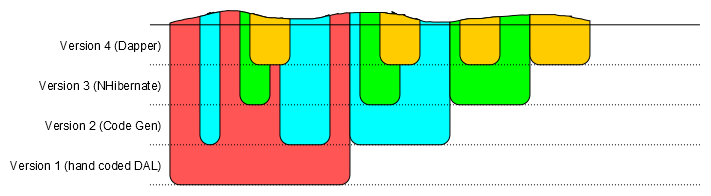

This is a graphical representation of the DAL code over time. The Y-axis shows the version of the software. It starts with version one at the bottom and ends with version four at the top. The X-axis shows features, the older ones to the left and the newer ones to the right. Each technology choice is coloured differently. red is the hand-coded RecordSet DAL, blue the Active Record code gen, green DDD/NHibernate and Yellow is Dapper/Static methods.

Each new design and technology choice never completely replaced the one that went before. The application has archaeological layers revealing it’s history and the different technological fashions taken up successively by Laurence, Bruce, Ina and Gordy. If you look along the Version 4 line, you can see that there are four different ways of doing the same thing scattered throughout the code base.

Each successive lead developer acted in good faith. They genuinely wanted to improve the application and believed that they were using the best design and technology to solve the problem at hand. Each wanted to re-write the application rather than maintain it, but the business owners would not allow them the resources to do it. Why should they when there didn’t seem to be any rational business reason for doing so? High staff turnover exacerbated the problem. The design philosophy of each layer was not effectively communicated to the next generation of developers. There was no consistent architectural strategy. Without exposition or explanation, code standing alone needs a very sympathetic interpreter to understand its motivations.

So how should one mitigate against Lava Layer? How can we approach legacy application development in a way that keeps the code consistent and well architected? A first step would be a little self awareness.

We developers should recognise that we suffer from a number of quite harmful pathologies when dealing with legacy code:

- We are highly (and often overly) critical of older patterns and technologies. “You’re not using a relational database?!? NoSQL is far far better!” “I can’t believe this uses XML! So verbose! JSON would have been a much better choice.”

- We think that the current shiny best way is the end of history; that it will never be superseded or seen to be suspect with hindsight.

- We absolutely must ritually rubbish whoever came before us. Better still if they are no longer around to defend themselves. There’s a brilliant Dilbert cartoon for this.

- We despise working on legacy code and will do almost anything to carve something greenfield out of an assignment, even if it makes no sense within the existing architecture.

- Rather than try to understand legacy code, how it works and the motivations that created it, we throw up our hands in despair and declare that the whole thing needs to be rewritten.

If you find yourself suggesting a radical change to an existing application, especially if you use the argument that, “we will refactor it to the new pattern over time.” Consider that you may never complete that refactoring, and think about what the application will look like with two different ways of doing the same thing. Will this aid those coming after you, or hinder them? What happens if your way turns out to be sub-optimal? Will replacing it be easy? Or would it have been better to leave the older, but more consistent code in place? Is WidgetFinder better for having four entirely separate ways of getting data from the database to the UI, or would it have been easier to understand and maintain with one? Try and have some sympathy and understanding for those who came before you. There was probably a good reason for why things were done the way they were. Be especially sympathetic to consistency, even if you don’t necessarily agree with the design or technology choices.