If you are programming against a web service, the natural pattern is request-response. It’s always initiated by the client, which then waits for a response from the server. It’s great if the client wants to send some information to a server, or request some information based on some criteria. It’s not so useful if the server wants to initiate sending information to the client. There we have to rely on HTTP workarounds such as long-polling or web-hooks.

With messaging systems, the natural pattern is send-receive. A producer node publishes a message which is then passed to a consuming node. There is no real concept of client or server; a node can be a producer, a consumer, or both. This works very well when one node wants to send some information to another or vice-versa, but isn’t so useful if one node wants to request information from another based on some criteria.

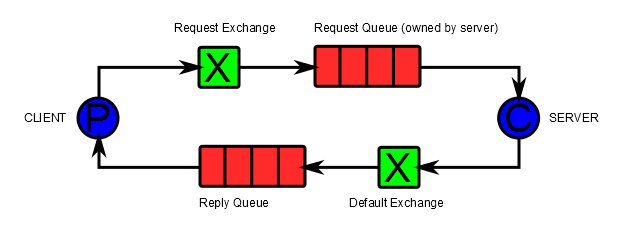

All is not lost though. We can model request-response by having the client node create a reply queue for the responses to the query messages it sends to the server. The client sets the reply_to property of each request message to the name of the reply queue. The server inspects the reply_to property and publishes its reply to that queue via the default exchange, which routes a message to the queue whose name matches its routing key. The client then consumes the reply from that queue.
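To make the reply_to flow concrete, here is a minimal sketch in Python. It does not talk to RabbitMQ: `ToyBroker`, `Message` and `serve_one` are hypothetical names for an in-memory stand-in that only models the default exchange's behaviour of routing by queue name (and silently dropping messages for queues that don't exist). The queue names `rpc.requests` and `client-1.replies` are also made up for illustration.

```python
import queue
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Message:
    body: str
    reply_to: Optional[str] = None        # name of the queue the reply should go to
    correlation_id: Optional[str] = None  # copied from request to reply by the server


class ToyBroker:
    """Hypothetical in-memory stand-in for a broker: just a set of named queues."""

    def __init__(self) -> None:
        self.queues: Dict[str, queue.Queue] = {}

    def declare_queue(self, name: str) -> str:
        self.queues.setdefault(name, queue.Queue())
        return name

    def publish_default(self, routing_key: str, msg: Message) -> None:
        # Default-exchange behaviour: route to the queue named by the routing key;
        # if no such queue exists, the message is silently dropped.
        q = self.queues.get(routing_key)
        if q is not None:
            q.put(msg)


def serve_one(broker: ToyBroker, request_queue: str) -> None:
    """Server side: take one request and publish the reply to its reply_to queue."""
    request = broker.queues[request_queue].get(timeout=1)
    reply = Message(body=request.body.upper(), correlation_id=request.correlation_id)
    broker.publish_default(request.reply_to, reply)


broker = ToyBroker()
broker.declare_queue("rpc.requests")
reply_queue = broker.declare_queue("client-1.replies")  # created by the client
broker.publish_default("rpc.requests", Message(body="ping", reply_to=reply_queue))
serve_one(broker, "rpc.requests")
answer = broker.queues[reply_queue].get(timeout=1).body
print(answer)  # PING
```

The "silently dropped" branch matters later on: if the reply queue has already been deleted when the server publishes, the response simply disappears.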

The implementation is simple on the request side: it looks just like a standard send-receive. On the reply side, though, we have some choices to make. If you Google for ‘RabbitMQ RPC’ or ‘RabbitMQ request response’, you will find several different opinions about the nature of the reply queue.

- Should there be a reply queue per request, or should the client maintain a single reply queue for multiple requests?

- Should the reply queue be exclusive, available only to the connection that declared it, or not? Note that an exclusive queue will be deleted when that connection is closed, either intentionally or because a network or broker failure causes the connection to be lost.

Let’s have a look at the pros and cons of these choices.

Exclusive reply queue per request

Here each request creates its own reply queue. The benefit is that it is simple to implement. There is no problem correlating the response with the request, since each request has its own response consumer. If the connection between the client and the broker fails before a response is received, the broker will dispose of any remaining reply queues and the response message will be lost.

The main implementation issue is that we need to clean up any reply queues in the event that a problem on the server means it never publishes a response.

This pattern has a performance cost because a new queue and consumer have to be created for each request.
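A minimal sketch of the per-request pattern, again against a hypothetical in-memory `ToyBroker` rather than a real RabbitMQ client. The client creates a uniquely named reply queue for each call and deletes it in a `finally` block, so the queue is cleaned up even when the server never answers. With RabbitMQ the queue would also be declared exclusive, so the broker removes it if the connection drops; the explicit delete covers the case where the connection stays up but no reply comes. `request`, `make_server` and the `reply.` name prefix are illustrative assumptions.

```python
import queue
import uuid
from typing import Callable, Dict, Optional, Tuple

Envelope = Tuple[str, Optional[str]]  # (body, reply_to)


class ToyBroker:
    """Hypothetical in-memory stand-in for a broker (not a real client library)."""

    def __init__(self) -> None:
        self.queues: Dict[str, queue.Queue] = {}

    def declare(self, name: str) -> None:
        self.queues.setdefault(name, queue.Queue())

    def delete(self, name: str) -> None:
        self.queues.pop(name, None)

    def publish(self, routing_key: str, body: str, reply_to: Optional[str] = None) -> None:
        # Unknown routing keys are silently dropped, as with the default exchange.
        q = self.queues.get(routing_key)
        if q is not None:
            q.put((body, reply_to))


def request(broker: ToyBroker, body: str, run_server: Callable[[], None],
            timeout: float = 0.1) -> str:
    reply_queue = f"reply.{uuid.uuid4()}"  # a fresh reply queue for this request only
    broker.declare(reply_queue)
    try:
        broker.publish("rpc.requests", body, reply_to=reply_queue)
        run_server()  # in a real system the server runs in another process
        reply, _ = broker.queues[reply_queue].get(timeout=timeout)  # raises queue.Empty on timeout
        return reply
    finally:
        broker.delete(reply_queue)  # clean up whether or not a reply arrived


def make_server(broker: ToyBroker, respond: bool = True) -> Callable[[], None]:
    def run() -> None:
        body, reply_to = broker.queues["rpc.requests"].get_nowait()
        if respond and reply_to is not None:
            broker.publish(reply_to, body[::-1])
    return run


broker = ToyBroker()
broker.declare("rpc.requests")
ok = request(broker, "abc", make_server(broker))
try:
    request(broker, "abc", make_server(broker, respond=False))
    timed_out = False
except queue.Empty:
    timed_out = True
leftover = [name for name in broker.queues if name.startswith("reply.")]
print(ok, timed_out, leftover)  # cba True []
```

Every call pays for a declare and a delete, which is the per-request cost described above.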

Exclusive reply queue per client

Here each client connection maintains a reply queue which many requests can share. This avoids the performance cost of creating a queue and consumer per request, but adds the overhead that the client needs to keep track of the reply queue and match up responses with their respective requests. The standard way of doing this is with a correlation id that is copied by the server from the request to the response.

Once again, the client does not have to worry about deleting the reply queue when it disconnects, because the broker does this automatically. It does mean that any responses in flight at the time of a disconnection will be lost.
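The correlation-id bookkeeping for a shared reply queue can be sketched in a few lines. This is a hypothetical in-memory model, not EasyNetQ's or RabbitMQ's API: plain deques stand in for the request queue and the client's single reply queue, and routing by reply_to is elided. The server copies each request's correlation id onto its reply and deliberately answers out of order, to show that the client matches replies by id rather than by arrival order.

```python
import uuid
from collections import deque
from typing import Deque, Dict, Optional, Tuple

Envelope = Tuple[str, str]  # (correlation_id, body)


class SharedReplyQueueClient:
    """One reply queue per client, shared by all of its outstanding requests."""

    def __init__(self) -> None:
        self.reply_queue: Deque[Envelope] = deque()
        # correlation_id -> reply body (None until the reply arrives)
        self.pending: Dict[str, Optional[str]] = {}

    def send(self, requests: Deque[Envelope], body: str) -> str:
        corr_id = str(uuid.uuid4())  # unique per request
        self.pending[corr_id] = None
        requests.append((corr_id, body))
        return corr_id

    def pump_replies(self) -> None:
        # Match each reply to its request by correlation id, in whatever order replies arrive.
        while self.reply_queue:
            corr_id, body = self.reply_queue.popleft()
            if corr_id in self.pending:
                self.pending[corr_id] = body
            # Otherwise the reply is for a request we no longer track (e.g. it timed out): drop it.


def answer_all(requests: Deque[Envelope], reply_queue: Deque[Envelope]) -> None:
    # Hypothetical server: copies the correlation id onto each reply and
    # answers in reverse order to show that the client does not rely on ordering.
    for corr_id, body in reversed(list(requests)):
        reply_queue.append((corr_id, f"echo:{body}"))
    requests.clear()


client = SharedReplyQueueClient()
requests: Deque[Envelope] = deque()
first = client.send(requests, "first")
second = client.send(requests, "second")
answer_all(requests, client.reply_queue)
client.pump_replies()
print(client.pending[first], client.pending[second])  # echo:first echo:second
```

A real client would also remove entries from `pending` once their results are handed back, and on timeout, so that late replies fall into the "drop it" branch.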

Durable reply queue

Both the options above have the problem that the response message can be lost if the connection between the client and broker goes down while the response is in flight. This is because they use exclusive queues that are deleted by the broker when the connection that owns them is closed.

The natural answer to this is to use a non-exclusive reply queue. However, this creates some management overhead. You need some way to name the reply queue and associate it with a particular client. The problem is that it’s difficult for the client to know whether a given reply queue belongs to itself or to another instance. It’s easy to naively create a situation where responses are delivered to the wrong instance of the client. You will probably wind up manually creating and naming response queues, which removes one of the main benefits of choosing broker-based messaging in the first place.

EasyNetQ

For a high-level, reusable library like EasyNetQ, the durable reply queue option is out of the question. There is no sensible way of knowing whether a particular instance of the library belongs to a single logical instance of a client application. By ‘logical instance’ I mean an instance that might have been stopped and started, as opposed to two separate instances of the same client.

Instead we have to use exclusive queues and accept the occasional loss of response messages. It is essential to implement a timeout, so that an exception can be raised to the client application when a response is lost. Ideally the client will catch the exception and retry the message if appropriate.
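Here is a minimal sketch of the timeout-and-retry idea in Python, using only the standard library. `RpcTimeout`, `call`, `call_with_retry` and `flaky_send` are hypothetical names, and a `queue.Queue` stands in for the exclusive reply queue; this is not EasyNetQ's API. The first response is "lost", as it would be if the connection dropped mid-flight, so the first attempt times out and the retry succeeds.

```python
import queue
from typing import Callable, List

SendFn = Callable[[str, queue.Queue], None]


class RpcTimeout(Exception):
    """Raised when no response arrives in time (hypothetical exception name)."""


def call(send: SendFn, body: str, timeout: float) -> str:
    replies: queue.Queue = queue.Queue()  # stands in for an exclusive reply queue
    send(body, replies)
    try:
        return replies.get(timeout=timeout)
    except queue.Empty:
        raise RpcTimeout(f"no response to {body!r} within {timeout}s") from None


def call_with_retry(send: SendFn, body: str, timeout: float = 0.05, attempts: int = 3) -> str:
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return call(send, body, timeout)
        except RpcTimeout:
            # Only retry requests that are safe to repeat (idempotent).
            if attempt == attempts:
                raise
    raise AssertionError("unreachable")


sent: List[str] = []


def flaky_send(body: str, replies: queue.Queue) -> None:
    # Simulates a lost response: the first request never gets a reply.
    sent.append(body)
    if len(sent) > 1:
        replies.put(body.upper())


result = call_with_retry(flaky_send, "status?")
print(result, len(sent))  # STATUS? 2
```

Whether retrying is appropriate depends on the request: a lost response does not mean the server didn't act on the request, so blind retries are only safe for idempotent operations.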

Currently EasyNetQ implements the ‘reply queue per request’ pattern, but I’m planning to change it to a ‘reply queue per client’. The overhead of matching up responses to requests is not too onerous, and it is both more efficient and easier to manage.

I’d be very interested in hearing other people’s experiences in implementing request-response with RabbitMQ.

How do you get around the issues of firewalls etc. for getting messages back to the client? What's the actual transport over t'internet? The main reason SignalR has gained so much traction is because it works over HTTP on port 80. To use a queuing system, you have to deal with firewalls and external vs. internal IP addresses (i.e. port forwarding etc.). Do those issues still apply?

@Neil

TCP connections are initiated from the client side and kept alive. Responses are sent back from the broker on the same connection.

This means that you only need a FW rule to allow egress traffic from the client (which often isn't required at all; many systems are set up to allow all egress traffic).

We are in the process of implementing something similar with the added complexity that the 'client' is a web application that is being polled at regular intervals for new data that can take a significant period to return from the 'server'. In addition, we would like the polling to be load balanced across a pool of stateless web apps. This means we can't really have a long-lived client connection.

Our proposed approach is to use a queue per 'user session' (i.e. a sequence of polling requests that are correlated with a single web app user and 1 or more pending request messages) that will auto-delete (x-expires) after a timeout period. This means that client connections can be created and dropped within a polling request and still connect to the same reply queue. Similarly, we can guarantee that the reply queue will be available for the 'server' to publish to for the duration of the session. We still need to verify that this will work at scale (we estimate that there will be in the region of 1000 active queues at peak load).

http://www.rabbitmq.com/ttl.html#queue-ttl

Thanks for sharing. Glad to know I'm not the only one wondering which approach to take!

I'm trying to avoid RPC as I'm not looking for data, but would like to get a simple job-completion status back, to update an upstream legacy system which originally raised the jobs.

From an MQ noob's perspective, it seems a shame you can't send a response code back with the ACK, rather than having to bolt on an entire separate reverse channel.

Can someone shed some light on why the response queue is unique to the instance? Is it because the assumption here is that the request is stateful? What if I have a pool of workers that watch the response queue and any one of them can process the response? I assume the response queue could then be shared?

Thoughts welcome - thx!