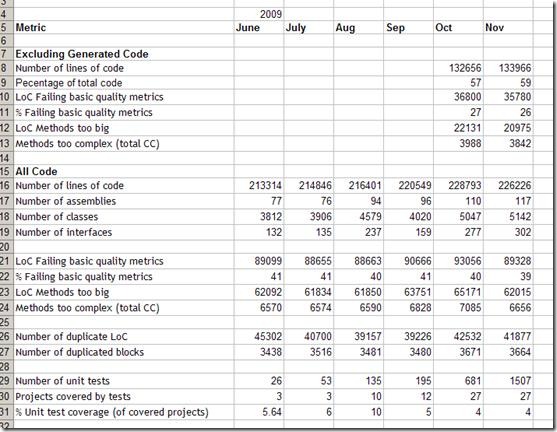

Since I started my new ‘architect’ (no, I do write code… sometimes) role earlier this year, I’ve been doing a ‘monthly code quality report’. This uses various tools to give an overview of our current codebase. The output looks something like this:

Most of the metrics come from NDepend, a fantastic tool if you haven’t come across it before. Check out the blog of its author, Patrick Smacchia.

We have lots of generated code, so we obviously want to differentiate between that and the hand-written stuff. Doing this is really easy using CQL (Code Query Language), a kind of SQL for code. Here’s the CQL expression for ‘LoC Failing basic quality metrics’:

    WARN IF Count > 0 IN SELECT METHODS /*OUT OF "YourGeneratedCode" */ WHERE
    (
        NbLinesOfCode > 30 OR
        NbILInstructions > 200 OR
        CyclomaticComplexity > 20 OR
        ILCyclomaticComplexity > 50 OR
        ILNestingDepth > 4 OR
        NbParameters > 5 OR
        NbVariables > 8 OR
        NbOverloads > 6
    )
    AND !(
        NameIs "InitializeComponent()" OR
        HasAttribute "XXX.Framework.GeneratedCodeAttribute" OR
        FullNameLike "XXX.TheProject.Shredder"
    )

Here I’m looking for overly complex code and excluding anything that is attributed with our GeneratedCodeAttribute. I’m also excluding the designer-generated InitializeComponent() methods and a project called ‘Shredder’, which is entirely generated.
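If it helps to see the logic outside of CQL, here is a rough Python sketch of the same filter. It is not how NDepend works internally. The `MethodMetrics` class, the metric key names and the idea of expressing the result as a percentage of hand-written lines are all my own assumptions for illustration; only the thresholds and the three exclusions come from the query.

```python
from dataclasses import dataclass, field

# Thresholds copied from the CQL query; the key names are hypothetical.
THRESHOLDS = {
    "lines_of_code": 30,
    "il_instructions": 200,
    "cyclomatic_complexity": 20,
    "il_cyclomatic_complexity": 50,
    "il_nesting_depth": 4,
    "parameters": 5,
    "variables": 8,
    "overloads": 6,
}


@dataclass
class MethodMetrics:
    """Hypothetical record for one method; not an NDepend type."""
    full_name: str
    lines_of_code: int
    generated: bool = False  # stands in for HasAttribute GeneratedCodeAttribute
    metrics: dict = field(default_factory=dict)  # any other metric values


def is_excluded(method):
    """Mirror the three exclusions in the query."""
    return (
        method.generated
        or method.full_name.endswith("InitializeComponent()")
        or "XXX.TheProject.Shredder" in method.full_name
    )


def fails_quality(method):
    """True if a non-excluded method exceeds any threshold."""
    if is_excluded(method):
        return False
    values = {"lines_of_code": method.lines_of_code, **method.metrics}
    return any(values.get(name, 0) > limit for name, limit in THRESHOLDS.items())


def failing_loc_percentage(methods):
    """Share of hand-written lines that sit in failing methods (assumed definition)."""
    hand_written = [m for m in methods if not is_excluded(m)]
    total = sum(m.lines_of_code for m in hand_written)
    failing = sum(m.lines_of_code for m in hand_written if fails_quality(m))
    return 100.0 * failing / total if total else 0.0
```

A method only needs to break one threshold to count as failing, which is why the check uses `any` rather than `all`.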

NDepend’s dependency analysis is legendary and also well worth a look, but that’s another blog post entirely.

The duplicate code metrics are provided by Simian, a simple command line tool that trawls through your source code looking for repeated lines. I set the threshold at 6 lines of code (the default). It outputs a complete list of all the duplications it finds, and it’s nice to be able to run it regularly, put the output under source control, and then diff versions to see where duplication is being introduced. A great way of fighting the copy-and-paste code reuse pattern.
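Your source control’s own diff does the job perfectly well, but if you want to script the comparison, something like this Python sketch would list the lines that appear only in the newer report. It treats the reports as plain text and makes no assumptions about Simian’s actual output format; the function name is my own.

```python
import difflib


def added_report_lines(old_report, new_report):
    """Return lines present in new_report that were not in old_report.

    Both arguments are the full text of two saved duplication reports.
    """
    old_lines = old_report.splitlines()
    new_lines = new_report.splitlines()
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    added = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        # "insert" and "replace" both introduce lines on the new side.
        if tag in ("insert", "replace"):
            added.extend(new_lines[j1:j2])
    return added
```

Anything this returns is either newly introduced duplication or an existing entry whose text changed, for example because the code moved, so it still needs a human eye.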

The unit test metrics come straight out of NCover. Since there were no unit tests when I joined the team, it’s not really surprising how low the level of coverage is. The fact that we’ve been able to ramp up the number of tests quite quickly is satisfying though.

As you can see from the sample output, it’s a pretty cruddy old codebase where 27% of the code fails basic, very conservative, quality checks. Some of the worst offending methods would make great entries in ‘The Daily WTF’. But in my experience of working in a lot of corporate .NET development shops, this is not unusual; if anything it’s a little better than average.

Since I joined the team, I’ve been very keen on promoting software quality. There hadn’t been any emphasis on this before I joined, and that’s reflected by the poor quality of the codebase. I should also emphasise that these metrics are probably the least important of several things you should do to encourage quality. Certainly less important than code reviews, leading by example and periodic training sessions. Indeed, the metrics by themselves are pretty meaningless and it’s easy to game the results, but simply having some visibility on things like repeated code and overly complex methods makes the point that we care about such things.

I was worried at first that it would be negatively received, but in fact the opposite seems to be the case. Everyone wants to do a good job and I think we all value software quality; it’s just that it’s sometimes hard for developers (especially junior developers) to know the kinds of things they should be doing to achieve it. Having this kind of steer with real numbers to back it up can be very encouraging.

Lastly, I take the five methods with the highest cyclomatic complexity and present them as a top 5 ‘Crap Code of the Month’. You get much kudos for refactoring one of these :)
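Picking the top five is trivial once you have the numbers. As a minimal Python sketch, assuming the complexity values have already been exported into a dictionary keyed by method name (the function name and that input shape are hypothetical):

```python
import heapq


def worst_methods(complexity_by_method, count=5):
    """Return the `count` (name, complexity) pairs with the highest complexity,
    ordered from worst to least bad."""
    return heapq.nlargest(
        count, complexity_by_method.items(), key=lambda item: item[1]
    )
```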

Thanks for sharing this. I really hope more people will care about the internal quality of software. There's so much waste...

We came up with something surprisingly similar for Java. You might be interested in seeing how we presented the quality problems in a column chart.

http://erik.doernenburg.com/2008/11/how-toxic-is-your-code/

Thanks Erik, I really liked your Toxicity Chart. It looks a little like some of the graphics that NDepend creates. It would be interesting to take CQL results and graph them for a similar effect.
